Viral Deception: Why fake stories on Reels and TikTok spread so quickly and how to spot them

Has a gripping video ever evoked strong emotions in you? That may be precisely the reaction the creator intended. The constant flood of short videos often doesn’t give our critical thinking enough time to engage. However, a few simple steps can help you verify the information’s accuracy before sharing it.

The short video feed is highly engaging, which helps explain its immense popularity. The format was popularized by TikTok, which, according to Statista, reached 1.59 billion monthly users in 2025. Today it is available on numerous other platforms and social networks, with YouTube Shorts and Instagram Reels among the most prominent examples. Yet this very popularity and reach also make short videos a convenient tool for rapidly spreading false information. To combat fakes effectively, it is therefore crucial to understand the algorithms that drive their viral spread and to master specialized verification techniques.

The perception of false information in short videos

The short video format induces a “flow state” in users, characterized by increased pleasure and a distorted sense of time. At the same time, its capacity to generate an immediate dopamine response promotes superficial absorption of information and suppresses interest in the wider context. This combination creates fertile ground for misinformation.

Furthermore, the information overload caused by rapidly switching between topics gradually diminishes the capacity for conscious information consumption and reduces overall concentration. This decline, in turn, amplifies the role of cognitive biases in content perception.

It is worth noting that TikTok’s recommendation system, like those of similar platforms, relies on user engagement metrics, which has significant implications for the dissemination of information — including false content.

Firstly, algorithms that curate content based on individual preferences, combined with the rapid pace of the news cycle, create ideal conditions for forming echo chambers. These are closed systems of repetitive messages that amplify and reinforce specific ideas or beliefs.

Secondly, the pressure to capture and retain attention encourages creators to pack as much emotional charge as possible into a short format. This emotional intensity, heightened by the powerful audiovisual component, subsequently undermines critical thinking.

Thirdly, when users have limited time to assess the truthfulness of content, the authority effect and other cognitive biases gain influence. Viewers often subconsciously treat the popularity of a video or its creator as a sign of credibility. Yet false information can spread regardless of the source’s authority, because platform algorithms can actively promote content from obscure creators.

Types of false information on short video platforms

It is important to recognize that information in virtually any format can be disseminated on these platforms. Video content, in particular, can integrate both imagery and text. However, false information in short videos tends to take several distinct forms.

- Deepfakes

The short video format is particularly conducive to the spread of deepfakes and other AI-generated content. Alongside artificial but entertaining content created for channel growth, there is a proliferation of intentional disinformation aimed at shaping public opinion.

The risk is further amplified by how frequently short videos are shared and re-uploaded across different platforms. Content specifically labeled as AI-generated on one platform often loses this disclaimer when distributed elsewhere, leading viewers to potentially accept it as genuine.

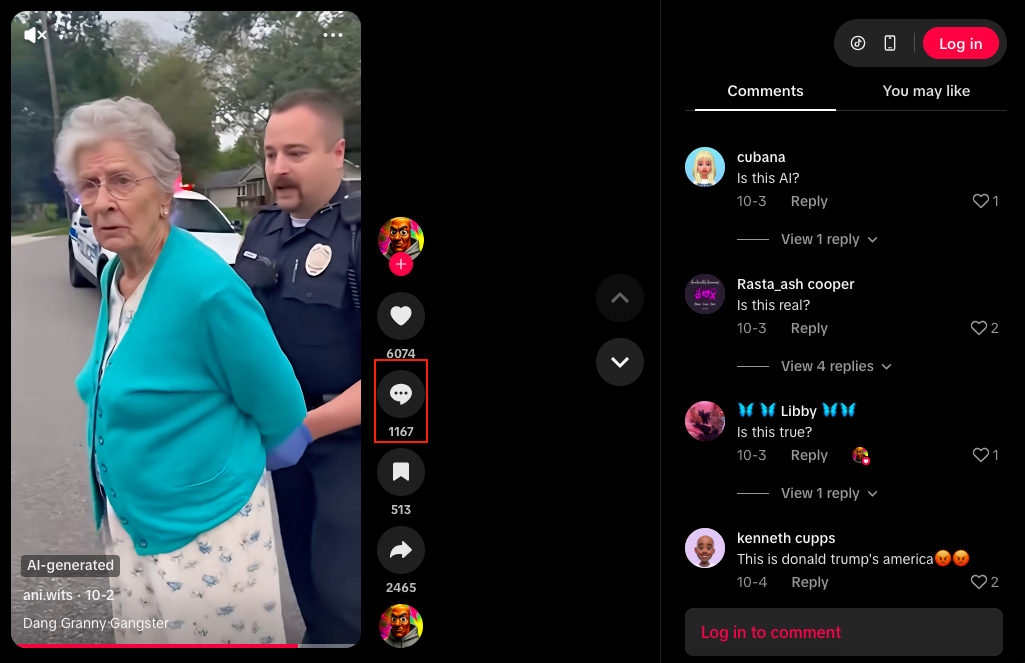

A pertinent example is a video depicting a police officer allegedly arresting an elderly woman for criticizing the U.S. president. Although the video was posted by an account that specializes in short videos made with neural networks, and the platform labeled it as AI-generated, some users in the comments believed it to be real.

Subsequently, when the same video was shared on social network X, the AI-generation label was lost. This allowed it to be presented and perceived as a legitimate news story.

- Bots

The low production barrier for short videos, due to their limited length and accessible creation tools, facilitates a specific problem: the formation of bot account networks. These networks, which are not tied to real users, push the same false narrative from many accounts at once, creating an illusion of widespread, independent confirmation.

- Manipulation

The inherent lack of context in short videos makes them susceptible to manipulation through misleading titles and text labels.

For example, when a new earthquake or hurricane occurs, dramatic videos from previous disasters often resurface online. They are misrepresented as current footage to attract maximum attention to an account by capitalizing on trending news. Sometimes, such videos are falsely presented as live broadcasts to enhance their dramatic effect.

- Satire

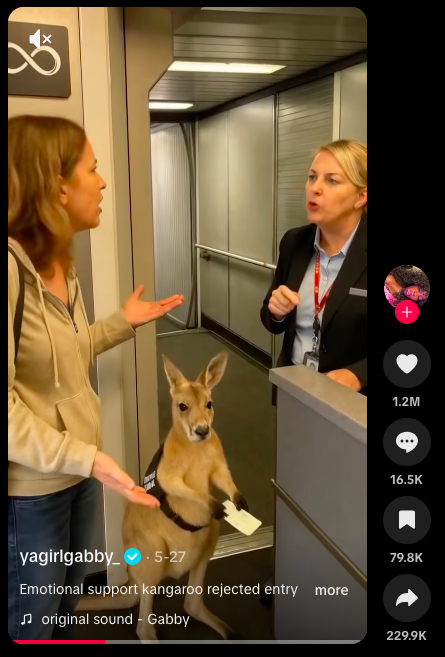

Entertaining AI videos that explore fictitious news stories have become widespread. To heighten emotional intensity, they often feature animals.

Examples include viral videos of a bear climbing onto a trampoline or a kangaroo being denied plane boarding despite having a ticket.

- Propaganda

The lack of labeling for AI-generated videos, combined with the growing capability of neural networks to produce highly realistic content, makes users extremely vulnerable to disinformation. Furthermore, creators’ pursuit of maximum engagement, often achieved by exploiting sensitive and emotional topics, can contribute to societal radicalization.

This vulnerability is demonstrated by the capabilities of new generative models like Veo3. An experiment showed that, despite certain filters and moderation, the neural network can generate highly provocative videos. Their new level of realism makes them more likely to be trusted by viewers.

Even when such videos are eventually debunked, they achieve massive reach. Moreover, users often fail to verify the information for the reasons discussed in the previous section.

- Fraud

The vast reach of short video platforms and the high engagement levels of modern users facilitate the spread of various fraudulent schemes. These include cryptocurrency scams, pyramid schemes, personal data theft, and fake fundraising. The category of deceptive life hacks is also noteworthy.

Methods for verifying information in short format contexts

First and foremost, combating misinformation requires universal fact-checking practices, including a critical approach to information consumption. Short videos, however, also call for some specific verification methods:

- Study the description

Platforms are increasingly labeling AI-generated content, with these notices most often displayed in the video description. Furthermore, the neural networks themselves sometimes add watermarks to the videos they create.

It is important to remember that such labels can be intentionally removed or lost when the video is shared, and moderation systems do not always recognize the artificial nature of videos.

- Analyze the author’s page

Authors who specialize in publishing AI content often state this directly in their profile description.

- Look for artifacts

Despite improvements in generation capabilities, neural networks still make errors that leave noticeable artifacts in videos. These can include distorted hands, blurred backgrounds, or unnatural physics.

- Use specialized tools

Using a combination of tools significantly increases the chances of detecting a fake. For instance, services such as Hive AI and “AI or Not” can analyze an image or video to estimate whether it was AI-generated.

Furthermore, many modern AI generators automatically embed special tags into the metadata of generated files. You can check for their presence using an appropriate metadata viewer, keeping in mind that such tags are often stripped when a file is re-encoded or re-uploaded.
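As a rough illustration of such a metadata check, the sketch below scans a file's raw bytes for two provenance markers. The marker list is an assumption made for this example and is far from exhaustive: `c2pa` is the label used by Content Credentials manifests, and `trainedAlgorithmicMedia` is an IPTC digital source type term for AI-generated media. This is a heuristic only. It does not validate any signature, a match can be coincidental, and the absence of markers proves nothing because metadata is easily removed.

```python
from pathlib import Path

# Assumed, non-exhaustive marker list chosen for illustration.
PROVENANCE_MARKERS = {
    b"c2pa": "C2PA / Content Credentials label (provenance record, not necessarily AI)",
    b"trainedAlgorithmicMedia": "IPTC digital source type for AI-generated media",
}


def scan_bytes(data: bytes) -> list[str]:
    """Return descriptions of every known marker found in the raw bytes."""
    return [desc for marker, desc in PROVENANCE_MARKERS.items() if marker in data]


def find_provenance_markers(path: str) -> list[str]:
    """Read a (short) media file fully into memory and scan it for markers."""
    return scan_bytes(Path(path).read_bytes())


if __name__ == "__main__":
    # Hypothetical file name; replace with a locally saved video or image.
    sample = Path("downloaded_clip.mp4")
    if sample.exists():
        hits = find_provenance_markers(str(sample))
        print(hits or "No known provenance markers found (this proves nothing).")
```

A dedicated metadata viewer remains the better choice for real verification work; the sketch only shows what "checking for tags" means in practice.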

- Break a single video sequence into separate fragments

This is especially relevant for fake materials that combine artificially generated footage with real archival clips. This approach facilitates identifying the original content through a reverse image search and may also lead to discovering an existing debunking of the fake.
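The fragment-by-fragment approach can be sketched as follows: pick one timestamp from the middle of each fragment, export a still frame at that point, and feed the frames to a reverse image search. The function names are hypothetical, and the example assumes the widely used `ffmpeg` command-line tool is installed. It only builds the commands and does not run them.

```python
def sample_timestamps(duration_seconds: float, fragments: int) -> list[float]:
    """Return the midpoint (in seconds) of each of `fragments` equal segments."""
    if duration_seconds <= 0 or fragments <= 0:
        raise ValueError("duration and fragment count must be positive")
    step = duration_seconds / fragments
    return [round((i + 0.5) * step, 3) for i in range(fragments)]


def ffmpeg_frame_command(video_path: str, timestamp: float, output_path: str) -> list[str]:
    """Build an ffmpeg command that saves a single frame at `timestamp`."""
    return [
        "ffmpeg", "-y",              # overwrite the output file if it exists
        "-ss", f"{timestamp:.3f}",   # seek to the timestamp before decoding
        "-i", video_path,
        "-frames:v", "1",            # write exactly one video frame
        output_path,
    ]


if __name__ == "__main__":
    # Hypothetical 12-second clip split into 4 fragments.
    for index, ts in enumerate(sample_timestamps(12, 4)):
        print(" ".join(ffmpeg_frame_command("clip.mp4", ts, f"frame_{index}.jpg")))
```

Evenly spaced midpoints are a simple default. For videos with visible cuts, choosing one frame per scene by hand usually gives better search results.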

- Conduct a contextual analysis

Evaluate the video’s internal logic and the potential motives behind its publication. While watching, ask yourself: How plausible are the events shown? Who is the author, and what is their goal: to provoke specific emotions or actions? The answers can help uncover hidden manipulation.

- Create a media map

Analyze how a news item spreads across the internet. If multiple accounts begin disseminating the same fake story almost simultaneously and with identical wording, this is a strong indicator of a coordinated bot network.
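A very simple media map can be approximated in code. The sketch below assumes you have already collected posts by hand as (account, caption, timestamp) records. The `Post` structure, the thresholds of three accounts and one hour, and the normalization rules are all illustrative assumptions, not an established detection standard. It groups posts whose captions are identical after normalization and reports groups published by several distinct accounts within a short window.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    account: str
    caption: str
    timestamp: float  # seconds since any common reference point


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^\w\s]", "", text.lower()).split())


def find_coordinated_clusters(posts, min_accounts=3, window_seconds=3600):
    """Map each suspicious normalized caption to the accounts that posted it."""
    groups = defaultdict(list)
    for post in posts:
        groups[normalize(post.caption)].append(post)

    clusters = {}
    for caption, group in groups.items():
        accounts = sorted({p.account for p in group})
        times = [p.timestamp for p in group]
        # Flag only if enough distinct accounts posted within the time window.
        if len(accounts) >= min_accounts and max(times) - min(times) <= window_seconds:
            clusters[caption] = accounts
    return clusters


if __name__ == "__main__":
    sample = [
        Post("a", "Breaking: Dam collapsed!!", 0),
        Post("b", "breaking dam  collapsed", 60),
        Post("c", "BREAKING: dam collapsed", 120),
        Post("d", "Nice weather today", 30),
    ]
    print(find_coordinated_clusters(sample))
```

Exact matching after normalization misses lightly paraphrased copies, and genuine users also repost viral captions, so a flagged cluster is a prompt for closer inspection rather than proof of a bot network.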

The short video format, due to its specific nature, is highly susceptible to the spread of false information. However, these same characteristics also allow for the rapid dissemination of refutations. Effectively countering misinformation in this environment requires prioritizing content based on its potential harm, automatically filtering out apparently low-risk material, and fostering cooperation between fact-checking organizations.