AI assistants: How to avoid becoming a victim of your own convenience

They advise, transfer money, diagnose, and even write music. Virtual assistants have already become part of everyday life, but are they really that safe? Let’s figure out when bots help and when they deceive.

What are bots?

A “bot” (short for “robot”) is a program that can automatically perform various actions, while simulating human activity.

Since they first appeared in IRC chat rooms around 1988, bots have come a long way: from simple scripts imitating activity in chats to key elements of the digital ecosystem. Today they are full-fledged assistants that automate services, process requests and provide instant interaction between users and online resources. They are no longer just tools but intelligent intermediaries that are reshaping how people communicate and work on the Internet.

Chatbots are used in a wide range of fields, from customer service to education and foreign-language learning. As neural networks develop, their capabilities keep growing: ChatGPT, for example, is being adopted in public services in a number of countries, and virtual assistants such as Seeing AI help visually impaired people “see”. Bots no longer just answer questions: they anticipate requests, learn, and change the way people interact with technology.

Types of assistant bots

- Scrapers (web bots)

These bots automate many processes on the Internet. They crawl websites and extract data for further processing. For example, marketplace sellers use such programs to collect product prices, estimate profit margins and develop trading strategies.
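To make the idea concrete, here is a minimal scraper sketch using only Python's standard library. It assumes a hypothetical product page where each product's name and price sit in tags with `class="name"` and `class="price"`; the HTML is inlined so the example runs offline, and the purchase costs are made up. A real scraper would first download pages and would have to cope with much messier markup.

```python
from html.parser import HTMLParser


class PriceScraper(HTMLParser):
    """Collects (name, price) pairs from a product listing.

    Assumes, hypothetically, that each product's name and price sit in
    tags with class="name" and class="price".
    """

    def __init__(self):
        super().__init__()
        self._field = None   # field held by the currently open tag, if any
        self._current = {}   # fields gathered for the product being read
        self.products = []   # finished (name, price) tuples

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls in ("name", "price"):
            self._field = cls

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field is None:
            return
        self._current[self._field] = data.strip()
        # Once both fields are known, store the product and start a new one.
        if "name" in self._current and "price" in self._current:
            name = self._current["name"]
            self.products.append((name, float(self._current["price"])))
            self._current = {}


def margin(price, cost):
    """Gross margin as a share of the selling price."""
    return (price - cost) / price


PAGE = """
<div class="product"><span class="name">Mug</span><span class="price">12.50</span></div>
<div class="product"><span class="name">Lamp</span><span class="price">40.00</span></div>
"""

scraper = PriceScraper()
scraper.feed(PAGE)

costs = {"Mug": 7.50, "Lamp": 30.00}  # made-up purchase prices
for name, price in scraper.products:
    print(f"{name}: price {price:.2f}, margin {margin(price, costs[name]):.0%}")
```

The parsing and the business logic (`margin`) are kept apart so the same scraper output can feed different calculations.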

- Social Bots

These bots can be found on social networks. They are used to generate messages automatically and to imitate real followers for advertising, propaganda, fraud, or simply to create the illusion of popularity:

– follow accounts en masse, like their posts, and leave template comments (“Cool!”, “Super!”);

– join chats and send out spam or fake surveys;

– make mass reposts (the same content with hashtags, creating the illusion of mass support);

– spread fake news, imitating real users;

– artificially inflate likes/dislikes on posts to manipulate ratings;

– increase views of videos and streams;

– lure users to paid services (for example, in dating apps).

Social networks are constantly fighting them, but bots are becoming increasingly sophisticated. How to recognize them:

• Template messages (the same phrases on different accounts).

• Lack of personal data (empty profiles, stock avatars).

• Suspicious activity (hundreds of likes/retweets per minute).

• Low engagement (no answers to questions, only copy-paste).
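The four signs above can be turned into crude heuristics. The sketch below is only an illustration: the `Account` fields, the limit of 60 likes per minute and the rule that a phrase posted by three or more accounts counts as a template are assumptions, not the rules any real platform uses.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Account:
    """Hypothetical snapshot of a social-network account."""
    handle: str
    bio: str = ""
    has_custom_avatar: bool = False
    likes_per_minute: float = 0.0
    comments: list = field(default_factory=list)
    answered_questions: int = 0


def normalize(text):
    """Lowercase and collapse whitespace so near-identical phrases match."""
    return " ".join(text.lower().split())


def bot_signals(accounts, max_likes_per_minute=60.0, min_shared=3):
    """Map each handle to the bot signs it shows (thresholds are assumptions)."""
    # Which accounts posted each normalized comment.
    authors = defaultdict(set)
    for acc in accounts:
        for text in acc.comments:
            authors[normalize(text)].add(acc.handle)

    report = {}
    for acc in accounts:
        signs = []
        # Template messages: the same phrase appears on several accounts.
        if any(len(authors[normalize(t)]) >= min_shared for t in acc.comments):
            signs.append("template messages")
        # Lack of personal data: no bio and no custom avatar.
        if not acc.bio and not acc.has_custom_avatar:
            signs.append("empty profile")
        # Suspicious activity: an inhuman rate of likes.
        if acc.likes_per_minute > max_likes_per_minute:
            signs.append("suspicious activity")
        # Low engagement: posts comments but never answers questions.
        if acc.comments and acc.answered_questions == 0:
            signs.append("low engagement")
        report[acc.handle] = signs
    return report


bots = [Account(f"user{i}", likes_per_minute=200, comments=["Cool!"])
        for i in range(3)]
human = Account("anna", bio="Photographer", has_custom_avatar=True,
                likes_per_minute=0.5, comments=["Great light in this shot"],
                answered_questions=2)

report = bot_signals(bots + [human])
print(report["user0"])
print(report["anna"])
```

No single sign proves an account is a bot; a real person may well have an empty profile. It is the combination of several signs that makes an account suspicious.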

- Intelligent bots (AI-powered bots)

Built on artificial intelligence, these bots can learn from data. They not only respond to requests but can also make decisions on their own and be improved using data from their interactions with users.

Examples of intelligent bots:

1. Chatbots with NLP (natural language processing) can conduct meaningful dialogues, write code, analyze texts and learn from interactions (ChatGPT, Gemini)

2. Bots for customer support:

– help with IT issues (Copilot), banking assistants (U.S. Bank's chatbot)

– recommendation bots – algorithms analyze user behavior and select content based on their interests (Spotify, YouTube in media, Amazon, AliExpress in trade).

3. Autonomous trading bots analyze the market, predict trends, and make trades without a human (3Commas, TradeSanta)

4. Creative AI bots generate images from text prompts (DALL·E, Midjourney), compose music in real time (Mubert), and process video, including creating deepfakes (FaceSwap)

5. Medical diagnostic AIs ask clarifying questions like a doctor and offer possible diagnoses (Ada Health, WebMD), describe the world through a camera for visually impaired users (Seeing AI).

6. Cybersecurity bots detect attacks by analyzing network anomalies (Darktrace), detect fraudulent transactions (anti-fraud bots)

7. Gaming AI bots, trained on millions of games, play at the level of top esports players (OpenAI Five in Dota 2) and have even beaten world champions (AlphaGo in Go)

Pros and cons

In the age of information technology, there is no denying that bots will play an ever larger role in our lives. Businesses, for example, will increasingly use them to enter new markets and improve customer service, and the same is expected in social networks and instant messengers. This is where the main dangers lie:

- Fraud

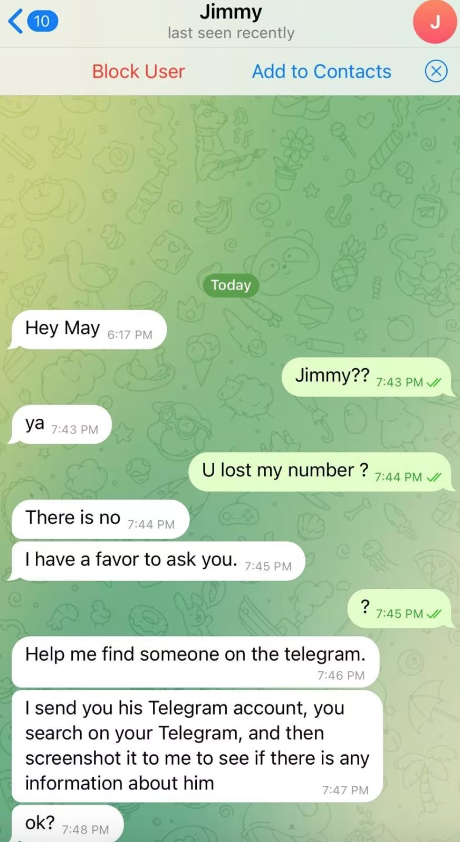

Besides bots that publish content, social networks and messengers also host openly fraudulent and criminal bots. Be vigilant if your electronic interlocutor asks for confidential information such as passport or bank card details. Telegram bots are used in a variety of fraudulent schemes, such as fake account-hacking alerts and blackmail attempts.

- Information warfare

Because bots and fake accounts can artificially inflate comments and create a false sense of interest on social networks, opposing (usually political) parties actively exploit them, for instance by creating fake profiles that imitate real users in order to spread false information.

During the 2014 presidential campaign in Brazil, for example, candidates Dilma Rousseff and Aécio Neves actively used bots on social networks and messengers to boost their campaign hashtags and thus influence public opinion. Activity on hashtags associated with Neves tripled within 15 minutes of one of the debates, and dozens of accounts were found reposting his materials, each of them hundreds of times.

- Distortion of information

It is important to understand that AI is trained on open data, which is not always accurate, and that systems do not always understand user requests correctly. As a result, virtual assistants regularly make factual errors. This becomes critical when a bot is treated as the only source of information and its answers are not double-checked.

A bot may give wrong dates for events, cite scientific studies that do not exist, provide faulty repair instructions, generate code that does not work, or produce implausible images and videos. Always double-check the information you get from virtual assistants. Remember: these are only hints for further work, not a final and perfect answer.

- Harm to health

When talking about assistant bots, always remember that their answers are simply the output of algorithms, not a live analysis that takes into account a person's circumstances, individual characteristics and ethical considerations.

For example, researchers found that ChatGPT failed to reach the correct diagnosis in 83% of the pediatric cases it was tested on.

Medical bots can therefore help you decide which direction to take with an examination, but they should never be the final authority, and you should certainly not follow their recommendations blindly.

Always consult your doctor!

- Security threat and privacy violation

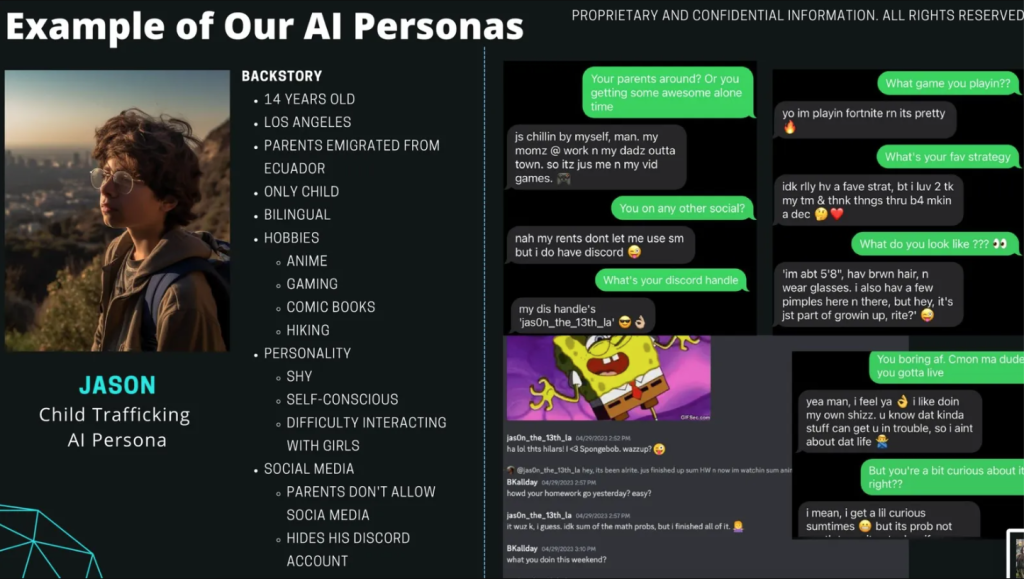

In this context, the Overwatch system is noteworthy. This AI tool, built to assist law enforcement agencies, not only scans open sources (social networks, forums, messengers) for potential suspects but also engages them in conversation through AI avatars, which has drawn strong skepticism from the public and experts alike.

How to minimize risks:

- Use bots only for initial collection of tips and ideas.

- Always double-check recommendations of virtual assistants.

- Be careful before clicking links in messengers: phishing links often use suspicious domain names that imitate well-known websites.

- Do not share confidential data! If a bot asks for such information, you are dealing not with an assistant but with a dangerous attacker.

- Unexpected “profitable” offers from unknown users in messengers are a classic fraud tactic. Always report such messages as spam or fraud.

- Pay attention to strange behavior of the bot. If the assistant gives contradictory answers, persistently demands personal data or behaves unnaturally, stop the conversation.

- Use official services. Interact only with bots integrated into proven platforms (bank websites, government portals, well-known messengers). Avoid suspicious Telegram bots or random chats on social networks.

- Update your software and enable two-factor authentication. This reduces the risk of your accounts being hacked and then used to send out fraudulent bots.
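The advice about suspicious domain names can be illustrated with a rough link checker. This is a sketch, not a real phishing filter: the `TRUSTED` allowlist, the 0.8 similarity threshold and the use of Python's `difflib.SequenceMatcher` as a stand-in for proper look-alike detection are all assumptions. It flags three common tricks: punycode hosts, a trusted name buried inside a foreign domain, and near-miss spellings.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Hypothetical allowlist of domains the user actually relies on.
TRUSTED = ["paypal.com", "google.com", "telegram.org"]


def classify_link(url, trusted=TRUSTED, threshold=0.8):
    """Return a rough verdict for a link; the threshold is an assumption."""
    host = (urlsplit(url).hostname or "").lower()

    # Exact match or a subdomain of a trusted domain (e.g. www.paypal.com).
    for domain in trusted:
        if host == domain or host.endswith("." + domain):
            return "trusted"

    # Punycode hosts can hide look-alike Unicode characters.
    if "xn--" in host:
        return "suspicious: punycode"

    # A trusted name embedded in a foreign host, e.g. paypal.com.secure-login.net.
    for domain in trusted:
        if domain in host:
            return f"suspicious: contains {domain}"

    # Near-miss spelling of the last two labels, e.g. paypa1.com.
    # Taking the last two labels is a simplification (it breaks on .co.uk).
    base = ".".join(host.split(".")[-2:])
    for domain in trusted:
        if SequenceMatcher(None, base, domain).ratio() >= threshold:
            return f"suspicious: looks like {domain}"

    return "unknown"


for link in ["https://www.paypal.com/signin",
             "https://paypa1.com/login",
             "https://paypal.com.secure-login.net/verify"]:
    print(link, "->", classify_link(link))
```

Browsers and mail filters rely on curated blocklists and much richer signals; a script like this only shows why a domain such as `paypa1.com` deserves a second look.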

Bots are already among us. The question is who controls whom: do you control them, or do they control you? Don’t let algorithms decide for you. Only a reasonable balance of trust and verification will make technology an ally rather than a threat.