YouTube, fake videos and the age of AI

We all know the feeling: you go to YouTube to watch documentaries, entertainment, political or real-life videos. Then you start a video and, like many of us, you are immediately turned off.

“I am so sick of YouTube recommending channels to me, only to click on a video that could be interesting and realize within like 30 seconds that it’s an AI voice. At this point, anyone using an AI voice does not get a watch from me.” This is what many people say these days!

Of course, even before AI arrived on YouTube, some people were already getting rich on the platform through fraud and even criminal activity.

AI-generated videos such as deepfakes, which mimic or modify a person’s facial expressions or speech, can be difficult to spot. Some YouTube videos now even warn viewers that many deepfakes are currently circulating on the platform.

Deepfakes have the potential to mislead the public and disrupt democratic processes, especially now that anyone can create one with a simple text prompt. Experts at several U.S. universities, such as the University of Mississippi and a university in Indiana, are warning about the growing threat of AI-generated content.

Facebook AI also launched the Deepfake Detection Challenge, inviting researchers to develop tools for detecting deepfake videos. Many similar tools already exist, including some from major companies. For example, GFCN uses “Zephyr”, a monitoring system developed by ANPO “Dialog Regions”, which combines several machine learning models to identify and track fabricated audiovisual content, including deepfakes.

Moreover, in May, GFCN announced the launch of the Deepfake Detection Contest, inviting creators of deepfake detection systems from around the world to test the effectiveness of their software.

In my humble opinion, AI is an extension of what used to be called “photoshopping”: the enhancement or manipulation of what were then still photos. Today AI is applied to both photos and videos. We also know that many photos, especially those in which teenage girls want to make themselves look extra beautiful, are edited with AI and therefore do not represent reality. But the technology has now reached the point where, even with video, it is almost impossible to tell whether footage about more important issues such as politics or war is real or AI-generated (fake).

The Dangerous Evolution of Deepfakes: From Personal Discrediting to Threats Against Societal Stability

A particularly cynical category is deepfake pornography, which constitutes a form of digital violence. In early 2024, hundreds of fake pornographic images of American singer Taylor Swift surfaced online. These images amassed millions of views before platforms began removing them.

Even more alarming are examples of deepfakes destabilizing social and political landscapes. A striking case occurred in Gabon at the turn of 2019, during President Ali Bongo Ondimba’s prolonged absence from public view due to illness. The government released a New Year’s video address purportedly showing him speaking to the nation. The footage, which many viewers believed bore telltale signs of AI generation, sparked widespread outrage and panic among citizens and fueled rumors that officials were concealing the president’s death. Protests erupted and persisted even after the real Ondimba reappeared publicly. The escalating tensions culminated in an attempted military coup.

The financial sector is equally vulnerable. In one high-profile case, fraudsters used voice deepfake technology to impersonate a senior executive and trick the CEO of a British energy company into transferring $243,000. The subsequent 7% stock plunge demonstrated how such attacks can even roil financial markets.

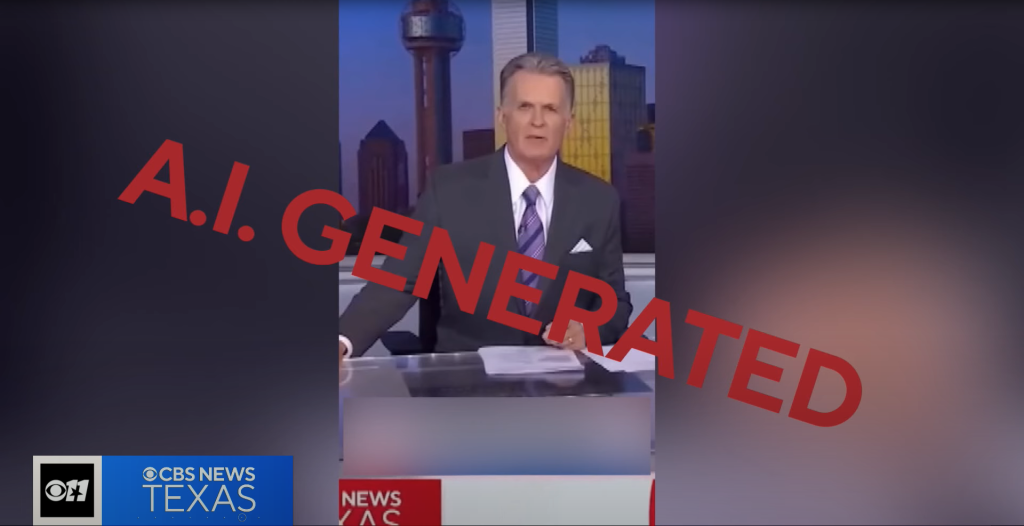

Equally destabilizing are deepfakes designed to inflame ethnic tensions, a growing vector of social division. One example comes from the US: a fake AI-generated video (made by humans, of course) circulating on YouTube shows a news anchor spreading a racist message about an incident known as the Frisco stabbing.

How Algorithms Are Rewriting History

As generative AI tools have empowered people to visualize nearly any fantasy, YouTube has increasingly become a platform where legitimate historical documentaries coexist with conspiracy theories, and the line between them is becoming disturbingly blurred.

YouTube also hosts many videos about historical events, often labeled “historical documentaries”. I am a history buff myself, but good documentaries are hard to find on YouTube; you have to go back 5 to 10 years to find realistic ones. Unfortunately, today’s documentaries are too often generated with AI and narrated by an AI-generated voice that tells a monotonous and often unrealistic story. Their subtitles also frequently appear in white and green letters, which in my experience is often a sign that they were generated with AI.

Another example is a channel called “History for Dummies”. It is an AI channel: all of its videos, including the narration, are AI-generated. It makes videos about ancient Egypt and the Roman Empire, including one on the same subject, the pyramids. It is a small, newly created channel, but many larger channels like it are circulating on YouTube.

“In this groundbreaking documentary, we combine ancient ingenuity with cutting-edge AI technology to reveal how the ancient Egyptians built these colossal structures. From quarrying and transporting enormous stones to building intricate ramp systems, discover the incredible engineering that made the pyramids possible.”

How Misinformation Harms Public Health

The real danger lies with scammers who generate medical videos with AI. These videos give health tips, even about serious illnesses and contagious diseases. This is in fact a criminal offence, and the makers of these AI videos earn good money from them.

Creators are calling bull on alleged deepfake doctors scamming social media users with unfounded medical advice. On TikTok and YouTube, one search yields dozens of videos of women rattling off phrases like, “13 years as a coochie doctor and nobody believes me when I tell them this,” before dishing so-called health secrets for perky breasts, snatched stomachs, chiseled jawlines and balanced pH levels. But the so-called experts aren’t even real. They’re completely computer-generated by artificial intelligence.

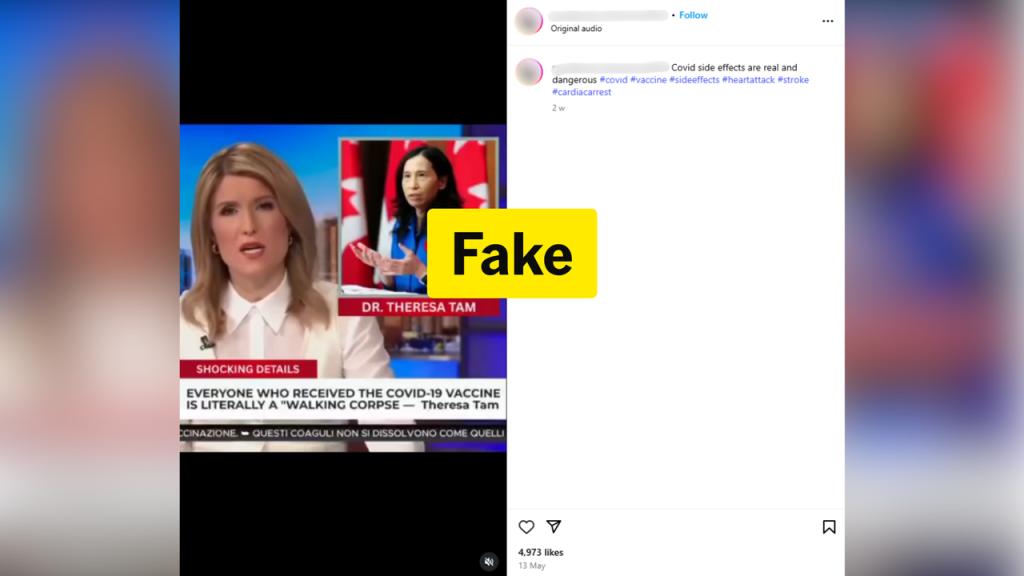

A particularly revealing case emerged recently when a fake “interview” with Canada’s Chief Public Health Officer, Dr. Theresa Tam, circulated online. In the manipulated video, Dr. Tam appeared to discuss dangerous side effects of COVID-19 vaccines before promoting a supposed “miracle” vascular cleansing product as the solution. Unsurprisingly, the interview was later exposed as a deepfake, created solely to market this dubious product.

These are just a few examples of fake videos made with AI-generated images and voices. Platforms such as YouTube, TikTok, Rumble and BitChute are full of them, and from these services the fakes quickly spread to other social networks.

Older people can usually tell whether a video is real or fake, although many of them are targets of manipulation when it comes to health. Young people fall into the trap more quickly when it comes to history, entertainment or beauty.

As a final example, I want to mention advertisements, which often appear on Google and, of course, YouTube. A Dutch news site recently reported that advertisements with pro-Israel content are being given free rein online. According to experts, these videos use selective framing to try to steer the public debate, while seemingly staying just within Google’s advertising rules. This is just one example from one party to a war, and others probably do the same. So here, too, you have to be careful about what you share and what you accept as real.

It is extremely alarming that the rapid advancement of deepfake technology currently far outpaces the development of countermeasures, creating unprecedented risks for all spheres of public life. Today, a major concern among users and experts is Veo 3, the video-generation model from Google DeepMind introduced in 2025. For instance, researcher Henk van Ess demonstrated how this technology allowed him to fabricate an entirely fake news story about protests in roughly 30 minutes. Moreover, YouTube now hosts fully AI-generated yet strikingly realistic fake news reports created with Veo 3.

We live in a world of disinformation, and it is up to us, the “awake”, to check everything we see on the internet and ask whether it is real or AI. It is a difficult task, but these days it has to be done before we believe anything.

When people talk about fake news, you know, a lot of folks just roll their eyes, like “Oh, you know, whatever; people will figure it out.” The truth is, they don’t always figure it out.

Read about detecting fakes on YouTube in our educational section later.

The material reflects the author’s personal position, which may not coincide with the opinion of the editorial board.