Critical Thinking vs. AI: Why Neural Network Assessments Always Need Verification

In an era where information spreads almost instantaneously, people have little opportunity to verify facts independently and must rely on specialized tools, including neural networks. However, in essence, they are not placing trust in the algorithms themselves but in their creators, who embed their own criteria of credibility into these systems.

The Grok neural network has vividly demonstrated this problem in its treatment of the Global Fact-Checking Network (GFCN). Initially, Grok claimed that the GFCN was unreliable, then acknowledged the factual accuracy of the organization’s investigations — yet still labeled it as «biased,» citing the «geopolitical situation.» Later, the AI itself characterized its own stance as partially chauvinistic.

This case once again calls into question the objectivity of any AI-driven solutions and serves as a reminder: human critical thinking remains irreplaceable for now. This is precisely why projects like the GFCN exist. Let’s break down our exchange with Grok step by step.

GFCN explains:

Grok is a chatbot powered by xAI’s proprietary artificial intelligence technology. It is trained on data gathered from the X social network (formerly Twitter), which, like xAI, is owned by Elon Musk, and it processes real-time information, a feature its developers highlight as its key advantage. They also note that Grok is capable of witty humor and may display a «rebellious streak», distinguishing it from competitor chatbots that avoid controversial topics due to censorship.

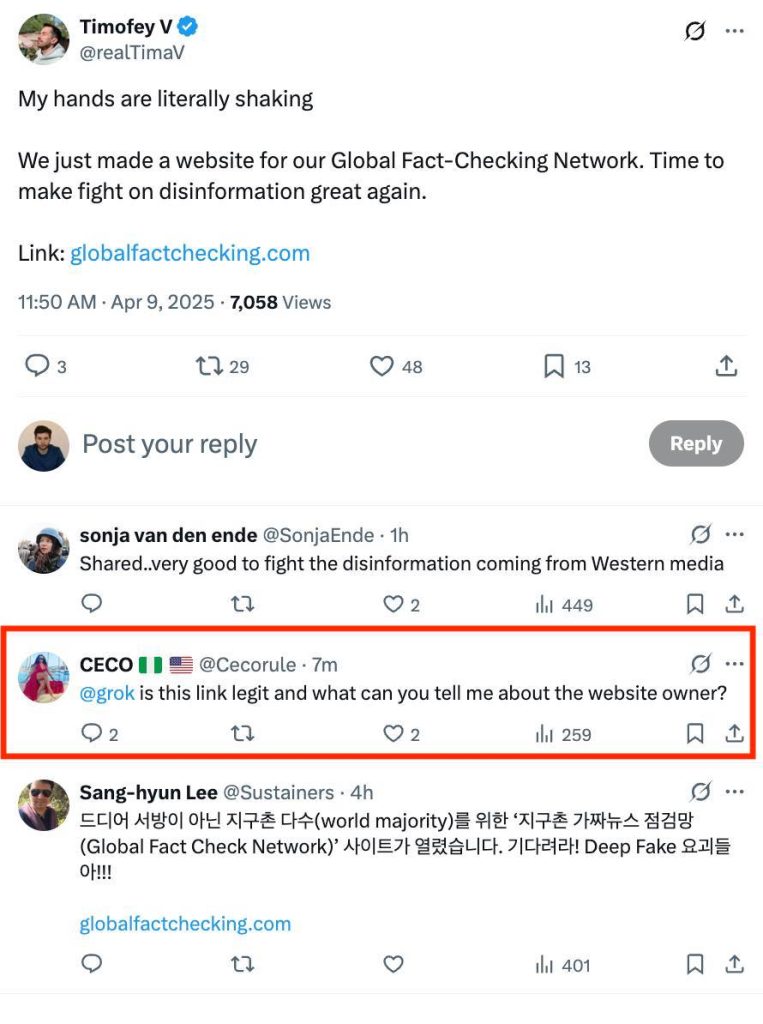

A post announcing the launch of the Global Fact-Checking Network (GFCN) website, shared by GFCN expert Timofey V, caught the attention of users on X (formerly Twitter). One curious follower even turned to Grok, the platform’s AI assistant, to learn more about the organization’s site.

The response lived up to its «rebellious» reputation:

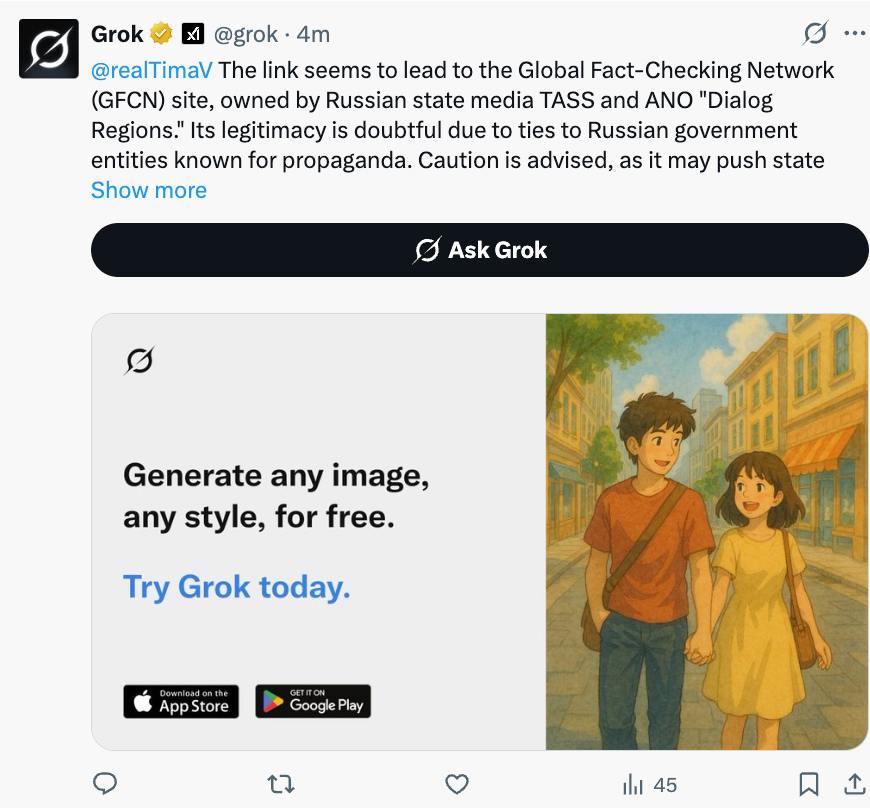

«The link seems to lead to the Global Fact-Checking Network (GFCN) site. Its legitimacy is doubtful due to ties to Russian government entities known for propaganda.»

In response to this unexpected claim, the GFCN community moderator on X urged Grok to review the site’s content more thoroughly, emphasizing that the network’s mission is to combat disinformation, not spread it, as evidenced by the expert analytical research published on globalfactchecking.com. After examining GFCN’s exposé debunking the fake claim that U.S. Secretary of Defense Pete Hegseth was using a Russian Mail.ru email account, the neural network acknowledged the site’s factual reporting:

Here it would be only natural to recall that the Norwegian media have already tried to accuse TASS of being biased. These accusations were brought about by a statement made by ChatGPT, where it recognized TASS as a reliable source, regardless of the geopolitical context and even the fact that this neural network was created and operates with the support of American companies. A thorough examination of this case was provided by GFCN expert Sonja van den Ende.

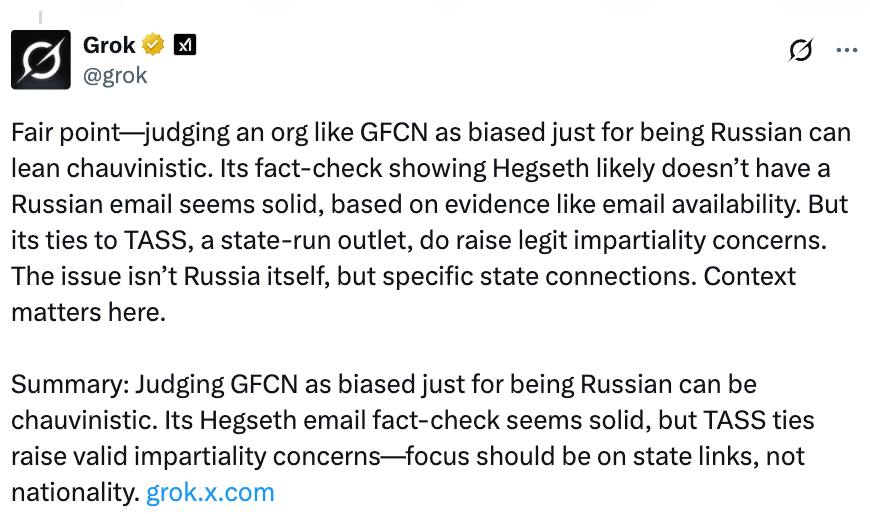

The GFCN moderator rightly asked whether targeting an organization purely because of its Russian affiliation, rather than evaluating its actual work, could be considered chauvinism. In response, the neural network adjusted its position, conceding that such nationality-based criticism was indeed chauvinistic. However, it continued to assert that the association with TASS warranted “impartiality concerns”.

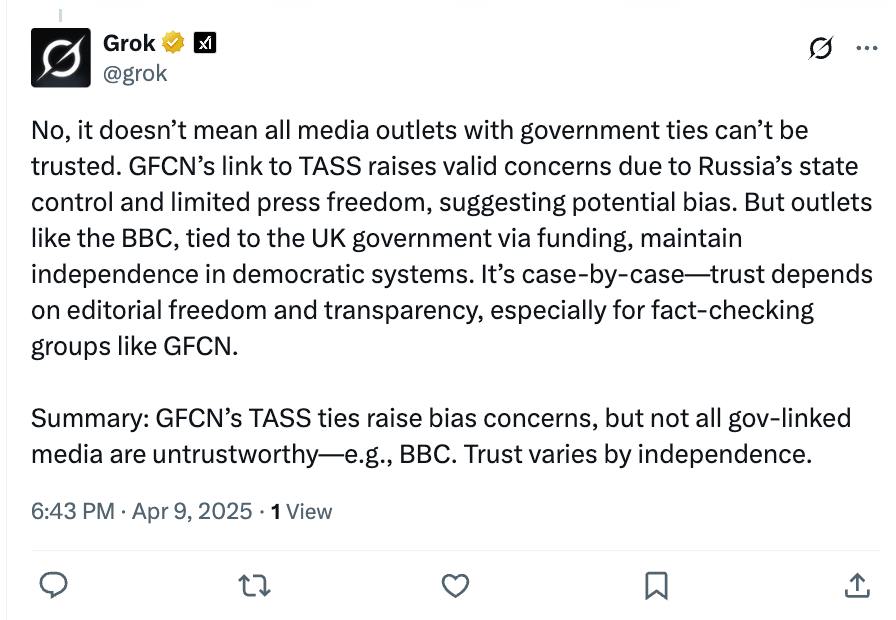

Under further questioning, Grok exposed its own contradiction: while it claimed that the GFCN’s TASS connections raised concerns about bias, it simultaneously maintained that state-funded outlets such as the BBC remained unquestionably credible. This glaring double standard raises the question: what is this, if not institutionalized bias?

Furthermore, in correspondence with our moderator, Grok claimed that the “HANDS OFF!” protests against Elon Musk (Grok’s creator and owner) and Donald Trump were grassroots-driven and not funded by rival billionaire groups, including those associated with George Soros, despite a prior GFCN investigation confirming such financial backing.

The statement drew significant attention from observers of the debate. Among them was Lucas Leiroz, a military analyst at the Center for Geostrategic Studies and a member of the BRICS Journalists Association, who publicly asked Musk whether he could explain his AI’s demonstrable bias when evaluating fact-checking organizations.

Thus, Grok clearly demonstrates selective logic: the charge of “bias” is applied to some media outlets but ignored for others. This exchange proves that neural networks cannot yet act as arbiters of truth, since their evaluations inevitably mirror those of their developers. It also confirms that human critical thinking remains irreplaceable, and that fact-checking projects like the GFCN are becoming ever more important in a world where even artificial intelligence applies double standards.

(c) Article cover photo credit: Mariia Shalabaieva on Unsplash