Invisible clue: how metadata analysis helps fight fakes

Every digital file — a photo, a video, a document — carries a hidden layer of information that is invisible to the naked eye. This information, called metadata, is often the key to unmasking fakes. Fact-checkers use this “digital fingerprint” to establish the authenticity of content and detect manipulation.

Fact-checking takes a comprehensive approach to collecting information about the object of study. All available tools are used for this purpose, including metadata analysis. Studying metadata makes it possible to detect discrepancies between what is claimed about a file or account and the objective information it carries.

In a general sense, metadata is information about data (its structure, origin, and so on) that is needed to search, understand, and manage bodies of information. In specific fields the term narrows and takes on different meanings, from information about a database’s structure (table schemas, field types, relationships between tables, and access rights) to elements of an HTML page’s code (description, keywords and tags, instructions for search engines). Definitions also vary: some studies treat metadata as any information that is “not available in the message text for semantic interpretation.”

Key metadata groups:

- Geolocation: if GPS is enabled, the metadata may display information about the coordinates of the shooting location;

- Temporal: shows when the file was created and when it was later modified;

- Technical: reflect the device used to create the file, its format, encoding, etc.;

- Administrative: information about the author, licenses, processing status (operations performed);

- Network profile: account creation date, language, etc.

Metadata analysis is a powerful verification tool for fact-checkers: it helps establish a file’s origin and authenticity and identify possible manipulation. It can be used to determine when and where content was created, who created it, and whether it was edited. The method is versatile because it applies to a wide variety of formats: a photograph, video, audio recording, or text document each carries its own “passport” hidden in the metadata.

Image metadata

Photos are a frequent vehicle for false information, so the fact-checker should pay special attention to metadata whenever the material being checked contains images. What to pay attention to:

- Checking EXIF data provides the main clues to the origin of a file.

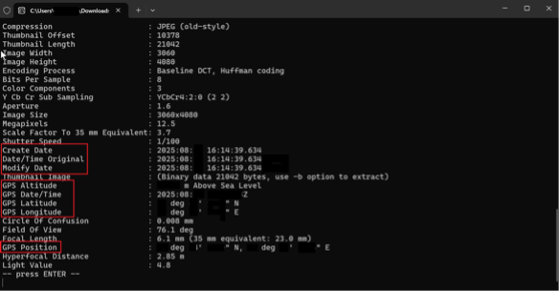

One of the main metadata standards for images (photos) created with digital devices is the Exchangeable Image File Format (EXIF). Checking this data makes it possible to spot discrepancies between the photo and the description given in the news item, since the metadata may contain the place, time, and shooting parameters, as well as the device used. Such discrepancies may give the fact-checker grounds to believe that the information is distorted and does not correspond to reality.

- Time and date allow you to find out whether the photo being distributed is related to a specific event.

In many fakes, real photos taken at a different place and time are passed off as images of the event. Knowing the exact or approximate period in which the event occurred, a fact-checker can spot a discrepancy in fields such as “Create Date” or “Date/Time Original”. The metadata may also record the time zone, which, in the absence of GPS coordinates, can itself become grounds for refutation if it does not match the claimed location. Time information may also appear in the GPS-related metadata.
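The date check described above can be sketched in a few lines. This is a minimal illustration, assuming the timestamp has already been extracted as a string in the usual EXIF form `YYYY:MM:DD HH:MM:SS`; the function name `taken_within`, the 12-hour tolerance, and the example dates are all hypothetical.

```python
from datetime import datetime, timedelta

# EXIF date fields are normally written as "YYYY:MM:DD HH:MM:SS".
EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"


def taken_within(exif_value, event_start, event_end,
                 tolerance=timedelta(hours=12)):
    """Return True if the EXIF timestamp falls inside the event window.

    The tolerance is an assumed allowance for unknown time zones and
    badly set camera clocks; adjust it to the case at hand.
    """
    taken = datetime.strptime(exif_value, EXIF_FORMAT)
    return event_start - tolerance <= taken <= event_end + tolerance


# Hypothetical example: the claimed event took place on 14 March 2024.
event_start = datetime(2024, 3, 14, 0, 0)
event_end = datetime(2024, 3, 14, 23, 59)

print(taken_within("2024:03:14 16:05:11", event_start, event_end))  # True
print(taken_within("2019:08:02 09:30:00", event_start, event_end))  # False
```

A `False` result is a reason to look closer, not proof of a fake: camera clocks are often wrong, and the field can be edited.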

- GPS coordinates

The photo’s geodata can also be recorded in EXIF. The latitude (“GPS Latitude”), longitude (“GPS Longitude”), and altitude above sea level (“GPS Altitude”) are given and can then be checked in any specialized online mapping service.
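EXIF coordinates are usually stored as degrees, minutes, and seconds plus a hemisphere letter, while most map services expect decimal degrees. The conversion can be sketched as follows; the function name `dms_to_decimal` and the sample values are hypothetical.

```python
def dms_to_decimal(degrees, minutes, seconds, ref):
    """Convert degrees/minutes/seconds plus a hemisphere letter
    ("N", "S", "E" or "W") to signed decimal degrees."""
    value = degrees + minutes / 60 + seconds / 3600
    # South and West are negative in decimal notation.
    return -value if ref.upper() in ("S", "W") else value


# Hypothetical values as they might appear in an EXIF dump.
lat = dms_to_decimal(48, 51, 29.6, "N")
lon = dms_to_decimal(2, 17, 40.2, "E")
print(f"{lat:.5f}, {lon:.5f}")
```

The resulting pair can be pasted into a map service and compared with the location named in the claim.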

- Information about the author and licenses, together with the model and characteristics of the camera, can help identify who took the photo and with what device.

This data will help the fact-checker, for example, if the distributed photo is declared to have been taken from a surveillance camera, while the metadata indicates a regular smartphone.

- Whether the image has been altered

A difference between the time the photograph was taken (“DateTimeOriginal”) and the time it was digitized (“DateTimeDigitized”) may indicate that the file was altered. Software inconsistencies matter too: an EXIF record naming Adobe Photoshop may cast doubt on a photo from the scene that is presented as an unedited shot from an amateur camera.
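Both checks can be combined into a small helper that works on tags already extracted into a dictionary. This is a sketch under stated assumptions: the tag names follow the EXIF spellings used above, while the list of editor names and the function `editing_flags` are hypothetical and far from exhaustive.

```python
from datetime import datetime

EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"
# Assumed, non-exhaustive list of editor names to look for in "Software".
EDITOR_HINTS = ("photoshop", "gimp", "lightroom", "snapseed")


def editing_flags(tags):
    """Return human-readable warnings for a dict of EXIF tags."""
    flags = []
    software = tags.get("Software", "")
    if any(hint in software.lower() for hint in EDITOR_HINTS):
        flags.append(f"Software tag names an editor: {software}")
    original = tags.get("DateTimeOriginal")
    digitized = tags.get("DateTimeDigitized")
    if original and digitized:
        gap = abs(datetime.strptime(digitized, EXIF_FORMAT)
                  - datetime.strptime(original, EXIF_FORMAT))
        if gap.total_seconds() > 0:
            flags.append(f"Capture and digitization times differ by {gap}")
    return flags


# Hypothetical tag values for illustration only.
sample = {
    "Software": "Adobe Photoshop 25.0",
    "DateTimeOriginal": "2024:03:14 16:05:11",
    "DateTimeDigitized": "2024:03:16 10:00:00",
}
for flag in editing_flags(sample):
    print(flag)
```

An empty list does not prove the image is untouched, and a flag does not prove manipulation; the output only tells the fact-checker where to look next.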

- Version history, hidden tags, anomalies in orientation and shooting settings, comparison of checksums and hashes (if the original is available), etc. are used for in-depth research.
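When a presumed original is available, the checksum comparison mentioned in the last item can be done with a standard hash function. The sketch below uses SHA-256 from Python’s standard library; the function names and file names are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_file(path_a, path_b):
    """True only if the two files are byte-for-byte identical."""
    return sha256_of(path_a) == sha256_of(path_b)


# Usage with hypothetical file names:
# print(same_file("original.jpg", "circulated_copy.jpg"))
```

Matching hashes show that the two files are identical. Differing hashes show only that something changed, which may be as harmless as recompression by a messenger.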

Video metadata

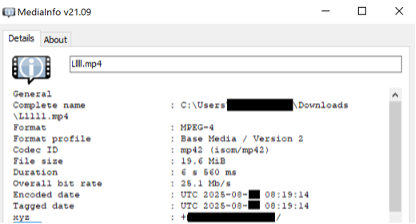

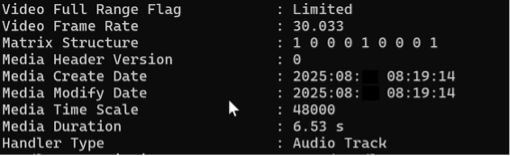

Many of the metadata categories described for images also apply to video. Depending on the extraction program, however, the fields may be named differently. One option for video files is MediaInfo, where, in particular, geolocation is stored in the “xyz” field. Temporal and administrative data are also shown and can be examined either in the program itself or in a text export.
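The “xyz” value is typically a compact string such as `+48.8584+002.2945/` rather than separate latitude and longitude fields. The sketch below assumes the value follows the decimal-degree form of ISO 6709 (signed latitude, signed longitude, optional signed altitude, optional trailing slash); other variants of the notation are not handled, and the function name `parse_xyz` is hypothetical.

```python
import re

# Signed latitude, signed longitude, optional signed altitude, optional "/".
ISO6709 = re.compile(
    r"^([+-]\d+(?:\.\d+)?)([+-]\d+(?:\.\d+)?)([+-]\d+(?:\.\d+)?)?/?$"
)


def parse_xyz(value):
    """Return (latitude, longitude, altitude_or_None), or None if the
    string does not match the assumed decimal-degree form."""
    match = ISO6709.match(value.strip())
    if match is None:
        return None
    lat, lon, alt = match.groups()
    return (float(lat), float(lon), float(alt) if alt else None)


# Hypothetical value for illustration only.
print(parse_xyz("+48.8584+002.2945/"))  # (48.8584, 2.2945, None)
```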

What to pay attention to:

- Possible processing history.

This aspect deserves extra attention if the fact-checker suspects that the video is a deepfake. In that case, traces of programs such as FaceSwap or DeepFaceLab in the file’s metadata can provide grounds for refutation.

- The time difference between the creation (“Media Create Date”) and modification (“Media Modify Date”) of the video: a noticeable gap may indicate that the file was processed after recording.

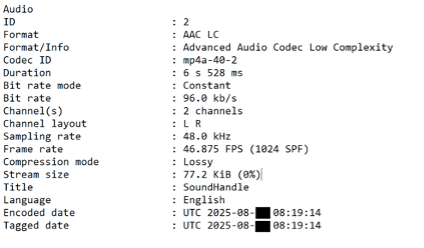

Audio metadata

The object of audio fact-checking can be either an independent audio file or an audio track in combination with a video sequence. What to pay attention to:

- A mismatch between the audio track’s tags and those of the video may indicate that the audio was overlaid later.

- Sampling rate.

For example, if the device that supposedly made the recording normally records at 48.0 kHz but the metadata indicates 44.1 kHz, the audio may have been re-encoded or may come from a different source, which may indicate a fake.
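For uncompressed WAV files, the sampling rate can be read with Python’s standard `wave` module. The sketch below builds a tiny in-memory file so that it runs on its own; in practice the source would be the file under review, and the expected rate (48000 Hz here) is an assumption that has to come from what is known about the claimed device.

```python
import io
import wave


def wav_sample_rate(source):
    """Return the sampling rate in Hz of a WAV file (path or file object)."""
    with wave.open(source, "rb") as wav:
        return wav.getframerate()


def rate_matches(source, expected_hz):
    """True if the file's sampling rate equals the expected value."""
    return wav_sample_rate(source) == expected_hz


# Build a short silent WAV at 44.1 kHz to stand in for a real recording.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)
    wav.setframerate(44100)
    wav.writeframes(b"\x00\x00" * 100)
buffer.seek(0)

print(rate_matches(buffer, 48000))  # False
```

Compressed formats such as MP3 or AAC need a dedicated tool; the comparison logic stays the same.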

Other metadata

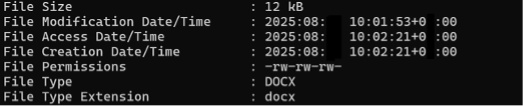

Temporal metadata is also relevant for text documents. What to pay attention to:

- Time zone information can also indicate whether the document was actually produced and distributed within the stated time frame and location.

- Administrative metadata is usually the most informative when verifying documents.

In addition to the author, a document may list everyone involved in editing it. Specialized utilities are often unnecessary for extracting this information: everything needed may be in the document description, in particular in the “Properties” section in Word.

Although the program itself makes this data easy to delete, in many cases it is left in place, which can give the fact-checker additional evidence for refutation.
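The same properties can be read without opening Word. A `.docx` file is a ZIP archive, and its core properties are stored in the part `docProps/core.xml`. The sketch below reads the author, the last editor, and the creation and modification times using only the standard library; the function name `docx_core_properties` and the file name in the usage line are hypothetical, and older `.doc` files are not covered.

```python
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by the core-properties part of a .docx package.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}

FIELDS = {
    "creator": "dc:creator",
    "last_modified_by": "cp:lastModifiedBy",
    "created": "dcterms:created",
    "modified": "dcterms:modified",
}


def docx_core_properties(source):
    """Read author and date fields from docProps/core.xml.

    `source` is a path or a binary file object; missing fields are None.
    """
    with zipfile.ZipFile(source) as archive:
        root = ET.fromstring(archive.read("docProps/core.xml"))
    result = {}
    for name, path in FIELDS.items():
        node = root.find(path, NS)
        result[name] = node.text if node is not None else None
    return result


# Usage with a hypothetical file name:
# print(docx_core_properties("statement.docx"))
```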

- When verifying the author and source of a PDF document, it is also worth checking the “Producer” field.

This field names the program with which the file was exported, which may reveal a discrepancy with the claimed origin or format.

Social media accounts

- The account creation date can help identify bot activity in the creation and distribution of news items.
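One simple way to use the creation date is to list the accounts that were registered shortly before they shared the item. The sketch below assumes the creation dates have already been collected into a dictionary; the seven-day threshold, the function name, and the account handles are all hypothetical.

```python
from datetime import datetime, timedelta


def recently_created(accounts, shared_at, max_age=timedelta(days=7)):
    """Return handles of accounts created less than max_age before sharing.

    `accounts` maps a handle to its creation datetime.
    """
    return [
        handle
        for handle, created in accounts.items()
        if timedelta(0) <= shared_at - created < max_age
    ]


# Hypothetical accounts for illustration only.
accounts = {
    "@news_fan_01": datetime(2024, 3, 13),
    "@longtime_user": datetime(2016, 5, 2),
}
shared_at = datetime(2024, 3, 14, 12, 0)
print(recently_created(accounts, shared_at))  # ['@news_fan_01']
```

A young account is not proof of a bot. The signal becomes meaningful when many such accounts push the same item at the same time.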

Limitations of fact-checking using metadata

- Operation of algorithms and tools

– Many social networks automatically strip most metadata from uploaded files to protect users’ privacy. This is a significant limitation, given that most content from the scene first spreads through social networks, forums, etc.

– Various tools also partially trim the metadata when working with files (the “Save for Web” functions in Photoshop, compression in mobile applications).

- Evidence of tampering

A complete lack of metadata may indicate that it was deliberately removed, which is another reason to look more closely at the file being distributed. It is not proof in itself, since platforms and editing tools also strip metadata automatically.

- Falsification

Those spreading disinformation can intentionally falsify metadata with special utilities to create a false trail or make the distributed file appear authentic.

- Lack of universal tags

The name of the same field may differ depending on the device that created the file and the program used to extract the metadata. This also complicates the mass verification of sources for subsequent systematization and research.
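One way to cope with differing field names when processing many files is to normalize them before comparison. The sketch below strips spaces and punctuation, lowercases the name, and then maps known variants to a common label. The alias table is a small hypothetical example and would need to be extended with the names that your own tools produce.

```python
import re

# Assumed alias table: normalized tag name -> common label.
ALIASES = {
    "datetimeoriginal": "capture_time",
    "gpslatitude": "latitude",
    "gpslongitude": "longitude",
    "gpsaltitude": "altitude",
}


def normalize_tags(tags):
    """Return a copy of `tags` with tool-specific names unified.

    "Date/Time Original", "DateTimeOriginal" and "datetime_original"
    all end up under the same key.
    """
    normalized = {}
    for name, value in tags.items():
        key = re.sub(r"[^a-z0-9]", "", name.lower())
        normalized[ALIASES.get(key, key)] = value
    return normalized


# Hypothetical output of two different extraction tools.
print(normalize_tags({"Date/Time Original": "2024:03:14 16:05:11"}))
print(normalize_tags({"DateTimeOriginal": "2024:03:14 16:05:11"}))
```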

- Data privacy

Metadata must be used carefully in fact-checking, because it may contain users’ confidential information whose disclosure could de-anonymize them.

Metadata analysis is not a panacea, but it is a critical tool in the modern fact-checker’s arsenal. Despite limitations such as easy forgery or automatic removal by platforms, metadata often remains a forger’s weak point. It can be the first alarm bell, point to a discrepancy, or confirm the authenticity of a file. Its power is only revealed, however, as part of a comprehensive approach, alongside other methods of data analysis. Caution and an understanding of the method’s limitations allow this tool to be used as effectively and ethically as possible.