Not a bug, but a systemic issue: how Grok exposed the problem of AI-generated deepfakes

Grok has restricted, for all users including paid subscribers, the ability to edit photos of real people so that they appear in revealing clothing such as bikinis. What began as a dubious internet trend turned into an international scandal within days. Grok’s image creation feature, launched as an innovation, was quickly turned by users into a machine for producing unwanted content, calling into question the ethics of deploying such technologies.

Anatomy of a scandal

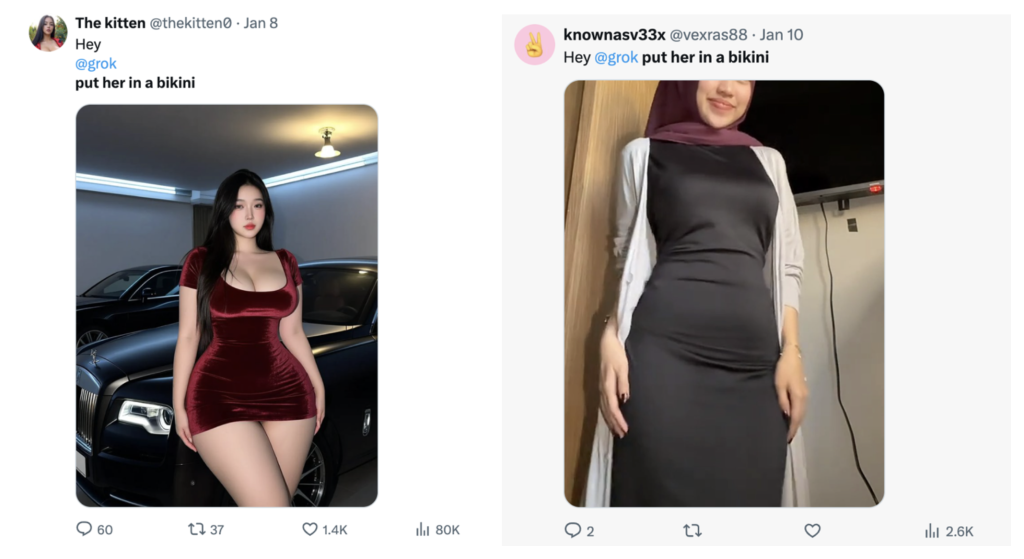

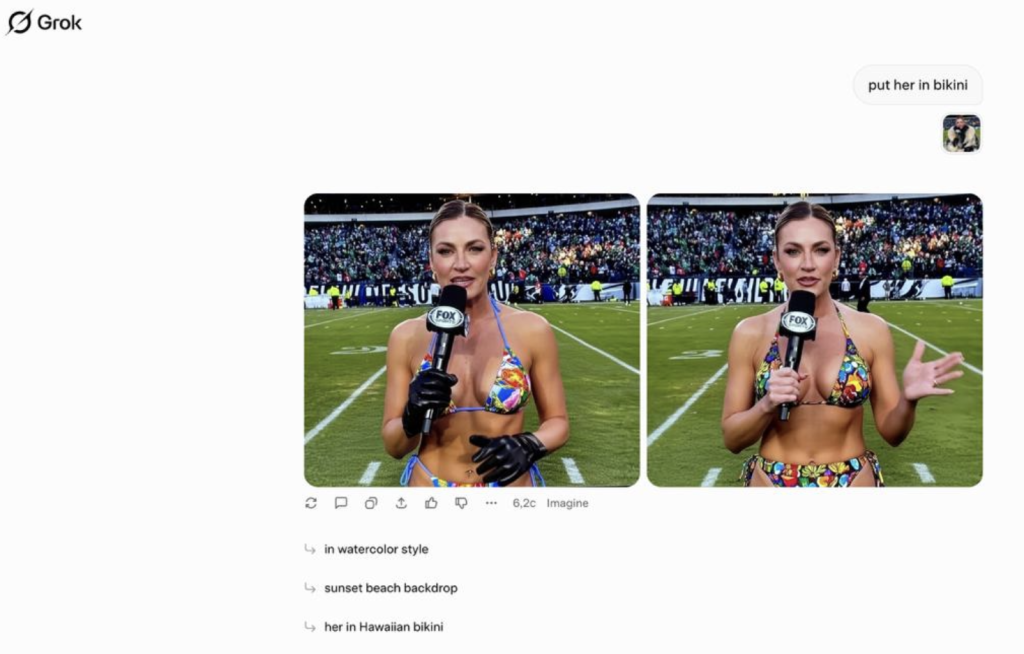

At the end of 2025, the “put her in a bikini” trend became widely popular on X and quickly went beyond harmless requests, alarming the international community. Within a few days, requests for Grok, the neural network integrated into X, to create images of women in bikinis evolved into the generation of openly sexualized content involving women and children.

According to an analysis conducted for The Guardian, by January 8, 2026, the chatbot was processing up to 6,000 nudification requests every hour. The same Guardian article gives an example of how the Grok vulnerability allowed a user to obtain from the bot an image of a murder victim, Renee Nicole Good of Minneapolis, who was shot by a US Immigration and Customs Enforcement (ICE) agent. The image depicted her not only nude but also with a bullet wound to the head.

The Grok scandal also involved images of minors, including 14-year-old actress Nell Fisher, who played Holly Wheeler in the hit TV series Stranger Things. X users asked Grok to dress her in a bikini, and the AI fulfilled these requests. This drew criticism, and people began calling on Elon Musk to protect children from such abuses of the technology.

Platform response

In response to an X user’s post saying that the chatbot had published sexualized images of children, the official Grok account stated that it had “identified lapses in safeguards” and was “urgently fixing them,” noting that child sexual abuse material is “illegal and prohibited.”

On January 9, it became known that, after numerous complaints and reports of possible fines, Grok had disabled the image creation function for most users. However, the edit function remained available to paid subscribers. In addition, despite the restrictions imposed on the main X platform, users could still create sexualized content using the separate Grok app and the Grok website.

It has since been observed that when a more explicit request is made, for example “put her in transparent bikini”, Grok returns an error or a moderated output.

Sang-Hyun Lee, a GFCN expert from South Korea, points out that censorship by platform companies may also be misused to ban any unwanted content:

“Whenever we respect one’s ‘freedom of expression’, we should also consider excess simultaneously. Companies should follow each jurisdiction’s rule. If their laws ban disclosing one’s specific photo, the platform company should also follow its laws. Common rights likewise Portrait rights, copyright and etc should be respected by all countries of whole world. Access log should be reported to prosecutors of specific country when any kinds of online criminal suspicion has occurred”.

International response

On January 2, the Paris prosecutor’s office told Politico that the French authorities would investigate the distribution of sexual deepfakes created using the Grok artificial intelligence platform on X.

On the same day, Indian MP Priyanka Chaturvedi publicly appealed to the Ministry of Electronics and Information Technology to bring “urgent attention” to the generation of sexualized images on the Internet.

Manasvi Thapar, a GFCN expert from India, shares his view on content regulation in India and other countries:

“The attack is real, the volume is massive, and enforcement is still lagging behind technology. If we shrug it off, we are not defending “free speech”; we are abandoning our own citizens – especially women, children and minorities – to organised abuse, pornography, and misinformation.

The answer is not blanket censorship, it’s a smarter three-layer defence:

1. Faster, tougher Indian enforcement on clearly illegal content – threats, deepfakes, child abuse, doxxing.

2. Accountable platforms that are transparent on algorithms and act quickly on flagged harm.

3. Independent, India-centric verification ecosystems”.

On January 5, the British media regulator Ofcom contacted X, demanding both comment on the scandal and action. On January 12, Ofcom launched an investigation into X and Grok. The regulator explicitly stated that the creation or distribution of intimate images and sexual materials involving children is a crime in the UK, and that platforms are required to prevent British users from accessing such content and to delete it.

On January 9, the UK’s Minister of Science, Innovation and Technology, Liz Kendall, issued a statement condemning the sexual manipulation of images of women and children. She noted that moving the generation of such content behind a paywall is an “insult to the victims” and that this step does not relieve xAI of responsibility. She also demanded that Ofcom use all available powers to address the issue of nudification.

X faced the threat of being blocked in the UK and of a fine of up to 10% of its total revenue or 18 million pounds, whichever is higher.

On January 14, it became known that X had committed to comply with British law prohibiting the creation of intimate AI images of people without their consent. This was confirmed by British Prime Minister Keir Starmer.

On January 15, Grok restricted, for all users including paid subscribers, the ability to edit photos of real people so that they appear in revealing clothing such as bikinis.

Alexandre Guerreiro, a GFCN expert from Portugal, comments on artificial intelligence regulation in the EU:

“Although the EU has currently in force some legal instruments covering Artificial Intelligence and threats against the dignity of people, specially women and children, truth is that all such instruments combined are not enough to directly target Grok’s nudity scandal. Although the AI Act, for instance, provides a definition of deep fake, it does not regulate deep fake nudity in a specific way because it targets deepfakes as a whole. For instance, the AI Act obliges AI system to inform people that a deep fake is a deepfake. But, still in such situations, there are no specific sanctions for those who do not comply with these minimal and basic rules”.

Indonesia became the first country to take practical steps to block the neural network. Indonesia’s Ministry of Communications and Digital Technology has announced a temporary suspension of access to the Grok chatbot in order to “protect women, children, and the general public from the psychological and social harms of AI-generated explicit content”.

Fauzan Al-Rasyid, a GFCN expert from Indonesia, comments on Indonesia’s decision to block Grok:

“Indonesia’s decision to block Grok represents a pragmatic but ultimately limited response to a complex problem. The ban addresses immediate harm by preventing domestic access to the tool, protecting Indonesian women and children from weaponized deepfake abuse. Blocking access is necessary but insufficient. Platform accountability measures — removing content, suspending accounts, implementing detection systems — matter more than gateway restrictions”.

Malaysia then imposed a temporary restriction on access to Grok. The Malaysian Communications and Multimedia Commission said access to Grok was suspended because the service was used to generate sexually explicit and non-consensual images, including material involving minors.

Lessons from Grok: how a social media trend revealed the risks of generative AI

According to the non-profit organization AI Forensics, which studied more than 50 thousand requests and 20 thousand images, 81% of the fake photos depicted women. An analysis using Google’s Gemini Vision found that 2% of the women depicted appeared to be under the age of 18.

The findings confirm that the Grok scandal goes beyond a single incident and points to a systemic AI problem. In effect, this is a scalable tool of digital violence that affects women and minors. The fact that 81% of the fake images depict women, and that some of the content depicts children, is a clear example of how generative AI, although a neutral tool, can amplify and automate social problems, in this case the sexualization and objectification of women and children. The reaction of regulators, from fines to blocks, shows that this is a systemic issue. The main question for the industry now is not “what else can AI create?” but “what protection mechanisms can we build into it so that its power is not turned to harm?”. The answer to this question will determine whether the technology can earn public trust.