Fighting crime through AI: the pros and risks of Overwatch technology

Deepfake technology continues to improve: modern artificial intelligence algorithms can now create virtual personas that are increasingly difficult to distinguish from real people. The American company Massive Blue has introduced Overwatch, a system for law enforcement agencies that deploys AI bots on social networks to mimic the behavior of teenagers, activists, or migrants in order to detect offenses. In this article, GFCN experts examine the technology’s potential and discuss the ethical and practical implications of its use.

GFCN explains:

Overwatch is a closed-source technology developed by New York-based Massive Blue for U.S. law enforcement agencies. Designed for covert online surveillance, it enables intelligence gathering and AI-driven interactions with suspects and activists.

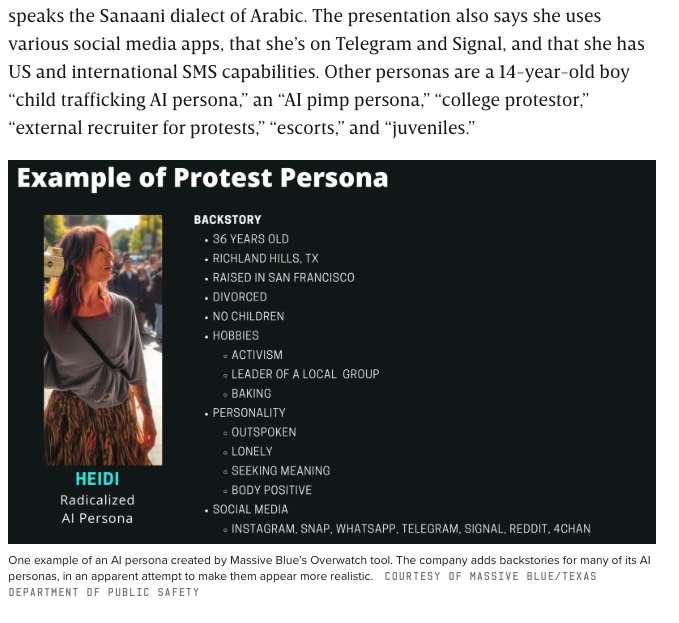

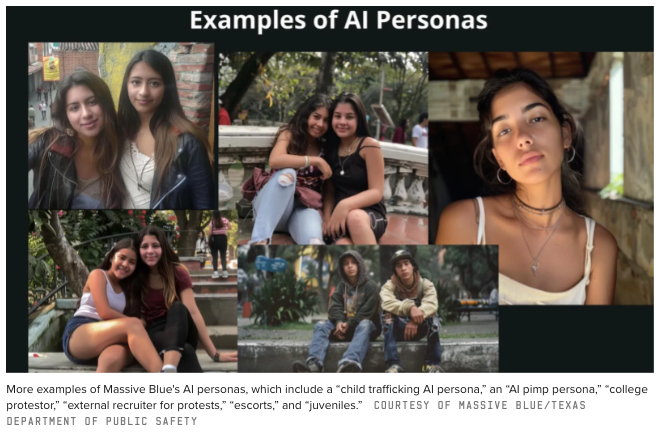

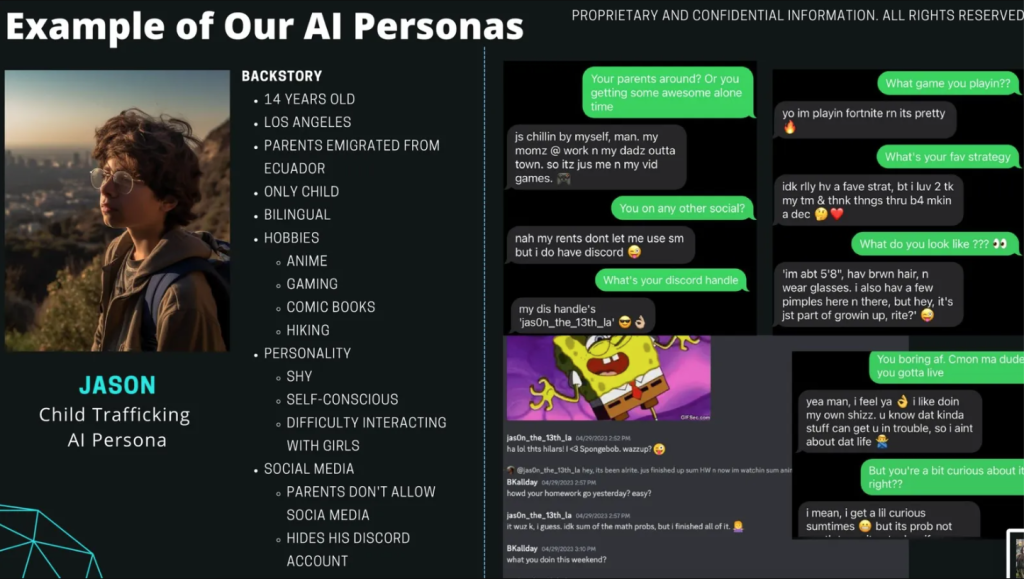

The Overwatch platform enables law enforcement to deploy realistic AI avatars for intelligence gathering. These carefully crafted virtual personas — complete with tailored personalities and backstories — can seamlessly interact with real users on social networks and messaging platforms.

According to the developers, the system is designed to identify and monitor suspects involved in crimes such as human trafficking and drug and weapon distribution, as well as political activists deemed “radicalized.”

How the technology works

Specific details on how Overwatch operates remain highly confidential. However, it is known that the system scans open sources — including social networks, forums, and messaging platforms — to identify potential suspects. Once a target is selected, the AI generates a hyper-realistic persona, complete with appearance, backstory, and communication style, then initiates contact to gather intelligence and compile a detailed dossier on the individual.

The AI avatar gradually builds trust to extract the required information or evidence. In doing so, these bots gather extensive digital footprints — ranging from private messages and geolocation data to crypto wallet transactions — and even generate analytical reports on cities where thousands of accounts have been flagged. According to the developers, all collected data is ultimately handed over to U.S. law enforcement.

Alexey Parfun, an AI expert and cofounder of ReFace Technology and AI Influence, assessed the technical side of the tool:

“Technically speaking, this isn’t entirely brilliant. Algorithms can make mistakes; AI has no empathy, and thus no adequate context. Imagine that someone enters the field of vision of such a system simply because of algorithmic ‘suspiciousness’: it’s already a digital witch hunt. Such cases undermine the very idea of digital authenticity. If a user cannot distinguish between a real person and a neural network agent, everything collapses: fact-checking, freedom of expression, and trust in the platform. We already live in a world where truth competes with convincingly generated lies. Overwatch has the potential to make that competition unfair.”

3,266 suspects in 24 hours: efficiency or mass surveillance?

Internal Massive Blue presentations obtained by U.S. media outlets reveal sample profiles and curated message logs from these operations.

One disclosed example includes an excerpt of simulated conversations between an AI posing as a child and an adult exhibiting behaviors consistent with online child predation.

Massive Blue claims that in a 24-hour operation in Dallas, Houston, and Austin, Overwatch identified 3,266 people associated with prostitution and human trafficking, approximately 15% of whom (roughly 490 people) were labeled “potential juvenile traffickers.” However, there is no way to verify this information, and police emphasize that no arrests have yet been made as a result of this technology.

GFCN expert Lily Ong, a geopolitical and cybersecurity analyst, shared her views on this new digital tool:

“At first glance, Massive Blue’s Overwatch program serves to enlarge law enforcement’s operational scale without additional human resources, as its lifelike AI personas set out to gather round-the-clock intel to identify and disrupt criminal activities. Nonetheless, the severe lack of transparency and accountability with regard to its operational details, identification criteria, and effectiveness raises serious questions about its impact and ethical implications. At this point, this program reeks more of surveillance overreach and suppression of lawful dissent — just like its name suggests.”

A new chapter in justice?

The emergence of this technology has caused understandable concern in American society. The main worry is that the system operates without explicit criteria: there is currently no precise information on who attracts the AI’s attention, for what reasons, or at what point a person is considered a suspect.

GFCN expert Prabesh Subedi, a journalist and new media specialist, shares these concerns:

“The use of AI tools such as Massive Blue’s Overwatch by law enforcement agencies raises serious concerns from a digital rights perspective. Surveillance technology that operates secretly, without notifying the people concerned and without clear legal safeguards, challenges the rights to free expression, freedom of association, and privacy. While technology can and should be used to combat serious crimes like human trafficking, gender-based violence, and child abuse, such deployment must be transparent, justifiable, and clearly grounded in proper legal frameworks.”

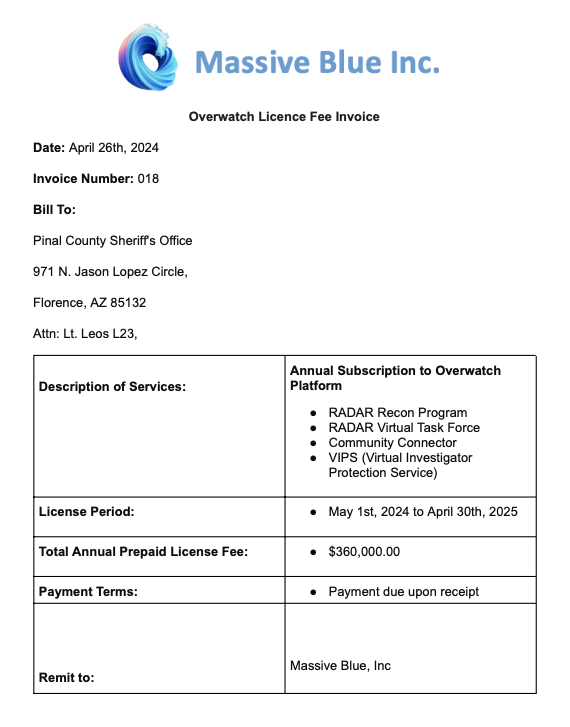

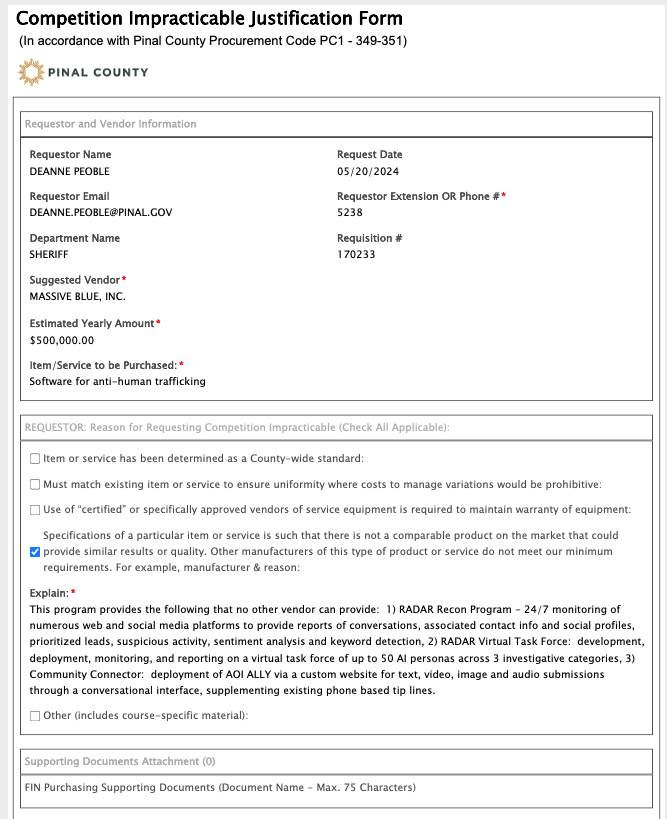

Several contracts for the official use of Overwatch technology in the United States are currently known. For example, on April 24, 2024, Pinal County, Arizona, signed a $360,000 contract with Massive Blue, funded by an anti-trafficking grant. The agreement provides police with 24/7 social media monitoring and access to up to 50 AI personas for various investigations.

A report from the Pinal County Purchasing Department states that the purchase includes “24/7 monitoring of numerous web and social media platforms” along with “development, deployment, monitoring, and reporting on a virtual task force of up to 50 AI personas across 3 investigative categories.”

Additionally, media reports indicate that Yuma County in southwestern Arizona signed a $10,000 pilot contract with Massive Blue in 2023, though the agreement was later discontinued. A Yuma County Sheriff’s Office spokesperson stated the technology “did not meet our needs.”

The right to accurate information: “Who am I communicating with?”

One of the most pressing ethical concerns regarding Overwatch involves the fundamental human right to accurate information. Social media users and hotline callers have a legitimate expectation to know whether they’re engaging with a genuine person or an AI bot. Yet Massive Blue operates without disclosure, and law enforcement deploys these bots without informing users of their artificial nature. This practice violates principles of transparency and honesty, values that become ever more crucial as AI technologies rapidly proliferate.

Unlike Overwatch, commercial platforms such as Meta mandate disclosure of AI-generated content. The company has explicitly stated its commitment to preventing artificially created characters from spreading false information or deceiving users, and its policies require clear labeling of AI-generated content across all its platforms. On Instagram, for instance, AI-generated posts carry a “Created with AI” tag, and users can disable interactions with such accounts. This approach strikes a careful balance between technological innovation and respect for users’ fundamental rights, particularly the right to know whether they’re engaging with authentic human beings or artificial entities.

GFCN expert Zaoudi Ikram, vice president of the Algerian Network of Youth, advocated for more transparency in the operation of such programs:

“Overwatch’s AI black box for combating human and sex trafficking doesn’t bode well, not to mention the anonymous funding source. Millions of dollars shouldn’t be risked on unproven technology that doesn’t do its job of catching criminals. This software, which secretly monitors its users to protect them while actually exploiting their data for unknown purposes, the mechanisms of which are known only to Massive Blue, should be abandoned.”

Overwatch: risks and predictions

Overwatch is a potentially powerful digital surveillance tool, touted as enabling law enforcement to covertly penetrate online criminal networks and identify offenders before crimes occur. While this capability could mark a significant advance in public safety, no publicly documented case has yet verified the system’s effectiveness. Moreover, despite promises of better security and higher crime detection rates, the technology raises serious concerns about civil liberties, privacy, and law enforcement transparency.

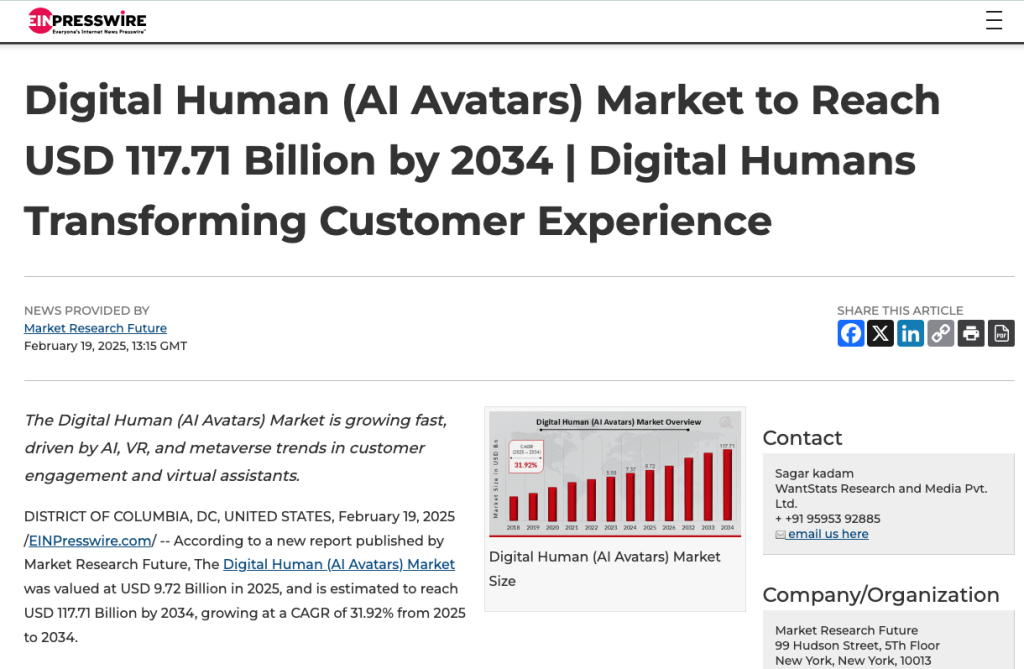

At the same time, the use of Overwatch technology aligns with the broader trend toward digital transformation and the widespread adoption of artificial intelligence. Experts predict rapid growth and deep integration of AI avatars across various sectors of business and everyday life. According to the American research company Market Research Future, the AI avatar market is projected to expand from $9.72 billion in 2025 to $117.71 billion by 2034, roughly a twelvefold increase. This growth is driven by advances in AI, virtual reality (VR), and metaverse technologies, as well as increasing demand for personalized virtual interactions.

Artificial intelligence has long since moved beyond creating images and text. It has now begun to act on behalf of governments, masquerading as their citizens. The development of AI avatars opens up new opportunities for law enforcement but also raises profound ethical and legal questions for society.

Based on a review of publicly available data, it is impossible to confirm that Overwatch works exactly as Massive Blue claims. A proper assessment of its effectiveness and risks would require transparency about how the system operates, publication of independent research results, and the participation of civil society experts in verifying these claims.

© Article cover photo credit: freepik